Welcome back. Anthropic is on a roll, and it may have just made every AI agent a domain expert with Claude Skills. Skills is a new feature that lets you specialize agents through simple folders of instructions that Claude loads only when needed.

Want to learn how to use it? Here's a senior developer's step-by-step guide to using Claude Skills.

Today’s Insights

New models from Cognition and DeepSeek for devs

Engineering Leaders’ Guide to becoming a great mentor

The Ultimate n8n guide, OpenAI’s tutorial on building agents

Trending social posts, top repos, new research & more

Welcome to The Code. This is a 2x weekly email that cuts through the noise to help devs, engineers, and technical leaders find high-signal news, releases, and resources in 5 minutes or less. You can sign up or share this email here.

THIS WEEK IN PROGRAMMING

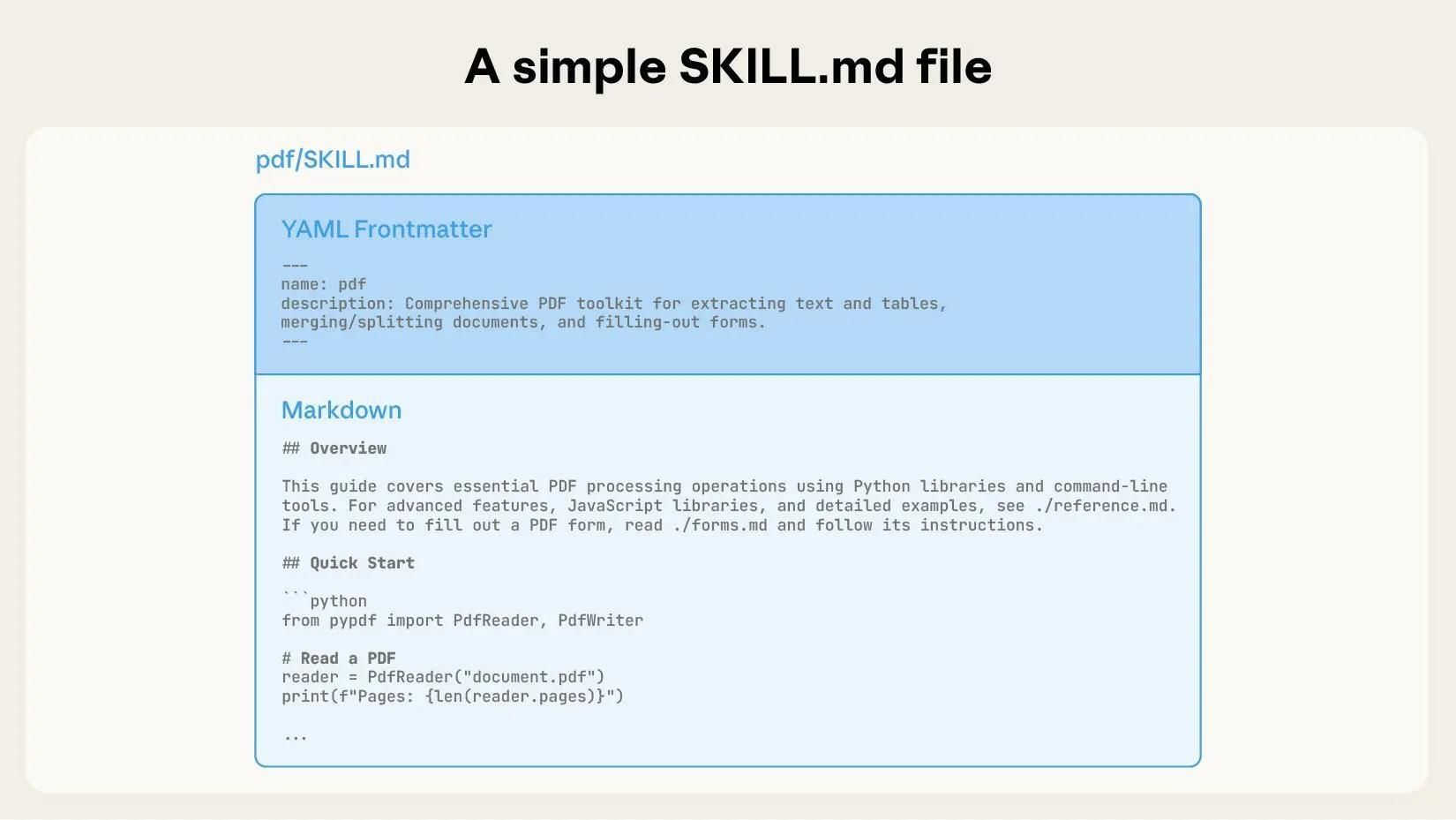

Anthropic launches Skills to help developers build specialized AI agents: Instead of building fragmented, custom agents for each use case, developers can now package their expertise into composable Skills — organized folders of instructions, scripts, and resources that Claude dynamically loads when needed. Here's a senior developer's step-by-step guide to using Claude Skills.

New models to cut down the time spent searching for relevant code context: Cognition and Windsurf just dropped SWE-grep and SWE-grep-mini, specialized models built to find the right code up to 20x faster than competing tools. Here's why it matters: coding agents typically waste over 60% of their time just searching for relevant context in large codebases. The new models fix this with parallel searches and smart retrieval. Here’s a tutorial on how to use them.
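For a feel of what "parallel searches" means in general, here is a minimal sketch that runs several regex queries over a codebase concurrently. It is not how SWE-grep works internally; the function names, the `*.py` filter, and the thread-pool approach are all assumptions chosen for illustration.

```python
import re
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def search_file(path: Path, pattern: re.Pattern) -> list[tuple[str, int, str]]:
    """Return (file, line number, line text) for every line matching the pattern."""
    hits = []
    try:
        text = path.read_text(encoding="utf-8", errors="ignore")
    except OSError:
        return hits  # unreadable files are skipped
    for lineno, line in enumerate(text.splitlines(), start=1):
        if pattern.search(line):
            hits.append((str(path), lineno, line.strip()))
    return hits


def parallel_search(root: Path, queries: list[str], max_workers: int = 8) -> dict[str, list]:
    """Run every query against every Python file under root, using a thread pool."""
    files = [p for p in sorted(root.rglob("*.py")) if p.is_file()]
    results: dict[str, list] = {q: [] for q in queries}
    # One job per (query, file) pair so that all searches can overlap.
    jobs = [(q, re.compile(q), f) for q in queries for f in files]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in job order, so hits stay grouped and sorted.
        all_hits = pool.map(lambda job: search_file(job[2], job[1]), jobs)
        for (query, _, _), hits in zip(jobs, all_hits):
            results[query].extend(hits)
    return results
```

Calling `parallel_search(Path("."), [r"def load_user", r"load_user\("])` would return one list of hits per query, which a coding agent could then rank before deciding what to read in full.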

DeepSeek just released a 3B OCR model: The Chinese AI giant launched DeepSeek-OCR which enables developers to compress long contexts optically at 10× ratios with 97% precision using minimal vision tokens. The open-source model processes documents faster than competitors, helping engineers build scalable OCR pipelines and generate massive training datasets efficiently.

TRENDS & INSIGHTS

What Engineering Leaders Need to Know This Week

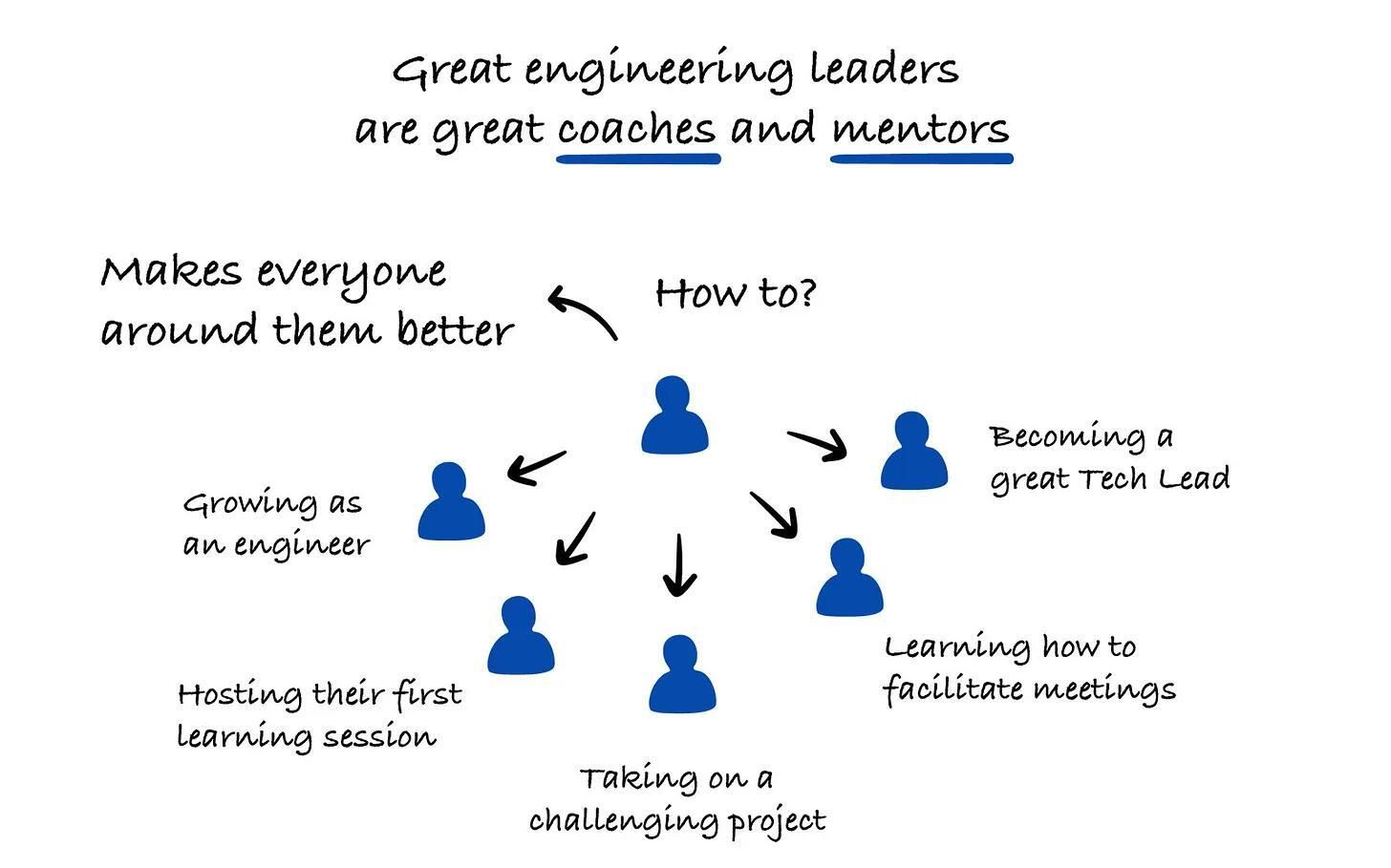

Engineering Leader’s Guide: How to Become a Great Coach and Mentor: A new guide shows why helping people grow matters more than technical skills for today's engineering leaders. With nearly 40% of employees wanting to switch jobs — mostly because of bad managers — leaders are trying new approaches like the Solution-Focused Model and CLEAR Method to make their one-on-one meetings actually useful.

Getting More Strategic: Cate Huston, engineering director and author, just published a deep dive into what makes leaders truly strategic — and why good strategy often goes unrecognized. She argues that effective strategy requires balancing four elements: time to think deeply, context to understand the situation, direction to set proximate objectives, and expertise to execute.

An Opinionated Guide to Using AI Right Now: Wharton professor Ethan Mollick just published his latest guide to navigating today's AI landscape, and it's perfectly timed. One standout tip: connect AI to your data sources like Gmail and calendars for dramatically better results.

IN THE KNOW

What’s trending on socials and headlines

Meme of the day

AI Ghosts: Andrej Karpathy says we’re building ghosts that mimic humans.

Unfair Advantage: How developers should use Claude sub-agents.

OG MCPs: A list of 5 MCP servers every developer should use for coding.

Quick Learn: This engineer explains 20 AI Concepts every developer should know.

AWS outage crashes Amazon, Prime Video, Fortnite, Perplexity, and more.

X just rolled out a new API that gives developers sub-second access to profile updates across the platform.

Google presents an interactive shell for Gemini CLI, which keeps workflows in context without switching terminals.

HuggingChat Omni allows you to route your messages between 100+ AI models to give you the best, cheapest & fastest answer possible.

TOP & TRENDING RESOURCES

3 Tutorials to Level Up Your Skills

OpenAI’s Codex Video Tutorials: Over the next two weeks, OpenAI is releasing a series of videos to help devs get better acquainted with Codex. The series includes comprehensive hands-on tutorials on integrating Codex with your existing IDEs.

The Ultimate n8n Starter Kit: The guide systematically builds your skills across n8n core concepts like trigger nodes, data transformations using expressions, and integrating third-party services through pre-built nodes or HTTP requests.

Building agents (OpenAI’s official guide): The ChatGPT maker launched a comprehensive developer guide for building AI Agents. The guide uses the new Responses API and Agents SDK, alongside built-in tools for web search, file search, code execution, and computer control.

Top Repos

Kubernetes for ML Engineers: This is a “just enough” step-by-step guide to help you understand the basics of Kubernetes.

claude-code-templates: It hosts ready-to-use templates and components to accelerate development with Claude Code.

Clone Wars: 100+ open-source clones of popular sites like Airbnb, Amazon, Instagram, Netflix, TikTok, Spotify, WhatsApp, YouTube, and more.

Trending Papers

Meta introduces ScaleRL, a recipe for predictable RL training: The paper shows not all RL recipes scale equally. Meta presents a systematic RL scaling study over 400,000 GPU-hours. They fit compute-performance curves, analyze design choices, and propose ScaleRL, a recipe that predicts and improves large-scale RL for LLMs.

Stanford shows simple prompting boosts variety without retraining models: Stanford introduces Verbalized Sampling, a prompting method that bypasses alignment-driven mode collapse. It boosts diversity up to 2.1×, recovers base model variance, and improves quality without retraining or fine-tuning.

LLMs can get “Brain Rot”: Researchers demonstrate that continual pre-training of large language models on low-quality social media content causes persistent cognitive decline across reasoning, long-context understanding, and safety.

What did you think of today's newsletter?

You can also reply directly to this email if you have suggestions, feedback, or questions.

Until next time — The Code team