Welcome back. OpenAI just went all-in on the security stack. It launched a benchmark to test how well AI agents exploit smart contract vulnerabilities, and GPT-5.3-Codex is already scoring 72% on its exploit tasks.

Also: how to build agents from scratch using PI, how to code a GPT in pure C, and the best GitHub resources for leveling up your prompting skills.

Today’s Insights

Powerful new updates and hacks for devs

Why cognitive debt is the new technical debt

How to give Claude Code a 1M token context window

Trending social posts, top repos, and more

Welcome to The Code. This is a twice-weekly email that cuts through the noise to help devs, engineers, and technical leaders find high-signal news, releases, and resources in 5 minutes or less. You can sign up or share this email here.

TODAY IN PROGRAMMING

OpenAI unveils benchmark for testing AI agents on smart contracts: The ChatGPT maker just released EVMbench, a new benchmark developed alongside Paradigm that measures how effectively AI agents can find, fix, and exploit vulnerabilities in smart contracts. Its latest model, GPT-5.3-Codex, scored 72.2% on exploit tasks, more than doubling GPT-5's performance from just six months ago. OpenAI is also putting up $10 million in API credits to support developers and security researchers using AI for defensive code audits.

Firecrawl gives AI agents their own secure browsers: The web data platform just launched Browser Sandbox. It gives agents secure, fully managed browser environments to handle forms, logins, and multi-page results with ease. Each session runs in an isolated container with Playwright pre-loaded, so engineering teams can scale to hundreds of parallel browsers without the headache of managing infrastructure. It's compatible with Claude Code, Codex, and other major platforms.

'Skill graphs' offer a fix for AI agents that lack domain depth: A standalone SKILL.md file might get the job done for simple automations, but developers are finding it lacks the technical depth and context needed for more complex tasks. That is why they are moving toward skill graphs: networks of small markdown files linked together. Because each file carries YAML metadata, agents can pull in just the context they need for a specific task. If you are looking to build your own, there is an open-source plugin that generates a starter set of about 250 connected files.
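A minimal sketch of how an agent might walk a skill graph. The note names and the front-matter fields (`description`, `links`) are hypothetical, not the format used by the plugin mentioned above, and the tiny parser handles only `key: value` and `key: [a, b]` lines rather than full YAML. The agent starts at an entry note and follows links breadth-first, loading only the notes reachable within a small depth.

```python
import re
from collections import deque

# Hypothetical skill graph held in memory: each note has YAML-style front
# matter with a description and links to other notes, then a markdown body.
NOTES = {
    "index.md": (
        "---\n"
        "description: Entry point for payments work\n"
        "links: [stripe-webhooks.md, idempotency.md]\n"
        "---\n"
        "Start here for anything touching payments."
    ),
    "stripe-webhooks.md": (
        "---\n"
        "description: Verifying and handling Stripe webhooks\n"
        "links: [idempotency.md]\n"
        "---\n"
        "Always verify the signature before trusting the payload."
    ),
    "idempotency.md": (
        "---\n"
        "description: Making handlers safe to retry\n"
        "links: []\n"
        "---\n"
        "Store processed event IDs and skip duplicates."
    ),
}

FRONT_MATTER = re.compile(r"^---\n(.*?)\n---\n(.*)$", re.DOTALL)


def parse_note(text):
    """Split a note into (metadata, body). Supports only `key: value`
    and `key: [a, b]` lines, not full YAML."""
    match = FRONT_MATTER.match(text)
    if not match:
        return {}, text
    meta = {}
    for line in match.group(1).splitlines():
        key, _, value = line.partition(":")
        value = value.strip()
        if value.startswith("[") and value.endswith("]"):
            items = [v.strip() for v in value[1:-1].split(",")]
            meta[key.strip()] = [v for v in items if v]
        else:
            meta[key.strip()] = value
    return meta, match.group(2).strip()


def gather_context(start, max_depth=2):
    """Breadth-first walk from `start`, collecting each reachable note once."""
    seen = {start}
    order = []
    queue = deque([(start, 0)])
    while queue:
        name, depth = queue.popleft()
        meta, body = parse_note(NOTES[name])
        order.append((name, meta.get("description", ""), body))
        if depth == max_depth:
            continue  # do not follow links past the depth limit
        for link in meta.get("links", []):
            if link in NOTES and link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return order


for name, description, _ in gather_context("index.md"):
    print(f"{name}: {description}")
```

In a real setup the notes would be files on disk, and the agent would decide which links to follow based on each `description`; the fixed depth limit is just the simplest stand-in for that judgment.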

PRESENTED BY DELVE

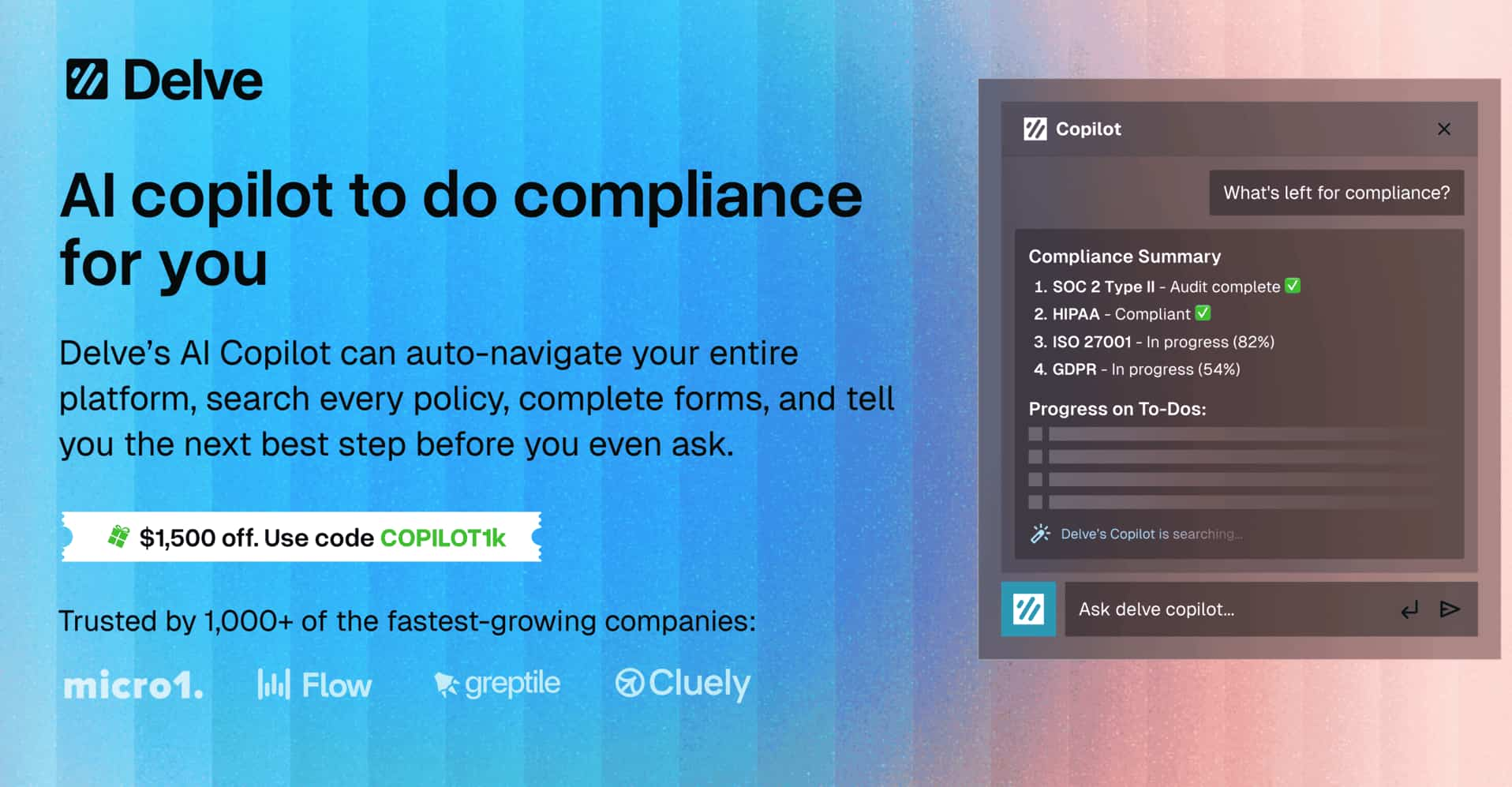

Ever been tired, stuck, and miserably documenting something it feels like AI was born to do? AI finally delivered.

Delve's AI copilot understands your entire tech stack and tells you exactly what to do next. Just ask it "What's my next step? Take me there" and watch Copilot read your screen and start doing tasks for you. Congrats, you've found the light at the end of the tunnel. Join Wispr Flow, 11x, Bland, and more in the future of compliance with Delve.

Book a demo here to get $1,500 off compliance and see Copilot in action with code COPILOT1k

INSIGHT

Why cognitive debt is the new technical debt

Source: The Code, Superhuman

Experts are struggling to keep up. Django co-creator Simon Willison recently realized he’d lost the mental model for his own projects, finding it increasingly difficult to wrap his head around every new feature. This gap in understanding, known as cognitive debt, continues to grow when teams prioritize shipping code over truly grasping its underlying complexities.

Data backs it up. A four-month MIT Media Lab study tracked this phenomenon and found that LLM users exhibited weaker brain connectivity. Many of them even struggled to accurately quote their own AI-assisted work. The pattern shows up in teams, too: one team initially blamed messy code, but the real issue was that no one on the team could explain their original design decisions.

The solution is structural. Former Vercel engineer Matt Pocock believes teams should prioritize understanding the codebase through its interfaces instead of getting caught up in implementation details. He suggests using "grey box modules" with clear contracts, where humans design the interfaces and AI handles the implementation. This abstraction makes the codebase easier to manage. To keep everyone aligned, he also recommends pair programming, AI-generated weekly reports, and RFCs for changes.
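To make the "grey box" idea concrete, here is a hedged Python sketch (not Pocock's code). A human writes the `RateLimiter` interface and the contract test; the class behind the interface is the part an AI agent could generate. All names are hypothetical. Reviewers only need to understand the interface and the test, and any implementation that passes can be swapped in.

```python
from typing import Callable, Protocol


class RateLimiter(Protocol):
    """Human-designed contract: the only thing callers need to understand."""

    def allow(self, key: str, now: float) -> bool:
        """Return True if `key` may make a request at time `now` (seconds)."""
        ...


class FixedWindowLimiter:
    """Implementation behind the interface (the part an AI agent could write).
    Callers depend on `allow`, not on these internals."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self._counts: dict[tuple[str, int], int] = {}

    def allow(self, key: str, now: float) -> bool:
        # Requests are counted per key within fixed windows of `window` seconds.
        bucket = (key, int(now // self.window))
        count = self._counts.get(bucket, 0)
        if count >= self.limit:
            return False
        self._counts[bucket] = count + 1
        return True


def check_contract(make_limiter: Callable[[int, float], RateLimiter]) -> None:
    """Contract test written against the interface only."""
    limiter = make_limiter(2, 1.0)  # 2 requests per 1-second window
    assert limiter.allow("alice", 0.0)
    assert limiter.allow("alice", 0.5)
    assert not limiter.allow("alice", 0.9)  # third call in the same window
    assert limiter.allow("bob", 0.9)        # keys are independent
    assert limiter.allow("alice", 1.0)      # a new window resets the count


check_contract(FixedWindowLimiter)
print("contract holds")
```

The human review burden shrinks to the two pieces at the top and bottom; the middle class can be regenerated or replaced as long as `check_contract` still passes.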

Risk isn't evenly distributed. Mid-level engineers are particularly vulnerable to cognitive debt because they established their technical foundations before the rise of LLMs and often lack the architectural intuition needed to oversee them effectively. Software developer Martin Fowler breaks down these specific risks across every seniority level, providing a framework for successfully pairing humans with AI agents while maintaining a clear, shared understanding of the system.

IN THE KNOW

What’s trending on socials and headlines

Meme of the day.

Agent Building Blocks: Stop relying on closed-source agent frameworks. This guide shows you how to build your own from scratch using PI.

GPT from Scratch: A developer built a working GPT model in pure C without libraries or dependencies. A must-read for devs who want to truly understand how transformers work.

Prompt School: This viral post compiles the best GitHub resources to master prompting and LLM fundamentals.

Scale Smart: Learn how sharding scales your database by splitting data across machines, which is far more efficient than copying the full dataset to every server.

Dogfooding Codex: OpenAI's Codex team shared automations and skills they use on their own code.

Cursor 2.5 brings past conversation memory and .agents/skills support to your coding agents.

Cohere Labs releases Tiny Aya, an open-weight model that supports over 70 languages and is small enough to run directly on your phone.

GitHub adds Claude Sonnet 4.6 to Copilot. It is available across all plans and IDEs, and it is great for search and agentic coding.
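On the Scale Smart item above: one common way to shard is to hash each key and assign it to one of N shards, so every row is stored once instead of on every server. A minimal in-memory sketch, assuming hash-based sharding (the linked guide may cover range- or directory-based schemes instead); `ShardedStore` and `shard_for` are illustrative names, and plain dicts stand in for database servers.

```python
import hashlib

NUM_SHARDS = 4


def shard_for(key: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a key to a shard index with a stable hash.
    (Python's built-in hash() is salted per process, so it is avoided here.)"""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


class ShardedStore:
    """Each dict stands in for one database server holding a slice of the data."""

    def __init__(self, num_shards: int = NUM_SHARDS) -> None:
        self.shards = [{} for _ in range(num_shards)]

    def put(self, key: str, value) -> None:
        self.shards[shard_for(key, len(self.shards))][key] = value

    def get(self, key: str):
        return self.shards[shard_for(key, len(self.shards))].get(key)


store = ShardedStore()
for i in range(1000):
    store.put(f"user:{i}", {"id": i})

# Each row lives on exactly one shard, so the total is 1000 rows,
# whereas copying the full dataset onto 4 servers would store 4000.
print([len(s) for s in store.shards], sum(len(s) for s in store.shards))
```

The modulo step is the simplest choice, but changing the shard count remaps most keys; production systems often use consistent hashing or a lookup directory to limit that reshuffling.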

AI CODING HACK

How to give Claude Code a 1M token context window

Claude Code's auto-compaction feature triggers as soon as your context window fills up, which often results in losing critical progress. Key decisions, your debugging history, and the overall architectural context can simply vanish.

However, a developer named Melvyn has shared a straightforward, two-step configuration override that switches Claude Code to Sonnet 4.6 with a massive 1M token context window.

Open .claude/settings.json and add these environment variables under the "env" key:

```json
{
  "env": {
    "ANTHROPIC_DEFAULT_HAIKU_MODEL": "claude-sonnet-4-6-1m",
    "ANTHROPIC_DEFAULT_SONNET_MODEL": "claude-sonnet-4-6-1m"
  }
}
```

This override points both the Haiku and Sonnet model slots at the 1M context version, so every call during your session uses the full context window.

Simply type /model and select Sonnet, then run /context to verify the update. You should see roughly 30k out of 1M tokens used, leaving over 900k tokens free.
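Because settings.json may already hold other options, a small script can merge the two variables into the existing "env" object instead of overwriting the file. A sketch in Python, assuming a project-level .claude/settings.json; `merge_env` is a hypothetical helper, and the model IDs are copied verbatim from the tip above, so check them against what your Claude Code version accepts.

```python
import json
from pathlib import Path

# Values copied from the tip; adjust them if your model ID differs.
OVERRIDES = {
    "ANTHROPIC_DEFAULT_HAIKU_MODEL": "claude-sonnet-4-6-1m",
    "ANTHROPIC_DEFAULT_SONNET_MODEL": "claude-sonnet-4-6-1m",
}


def merge_env(settings_path: Path, overrides: dict) -> dict:
    """Add `overrides` under the "env" key of a settings file, keeping every
    other setting (and any env vars) that is already there."""
    settings = {}
    if settings_path.exists():
        settings = json.loads(settings_path.read_text(encoding="utf-8"))
    settings.setdefault("env", {}).update(overrides)
    settings_path.parent.mkdir(parents=True, exist_ok=True)
    settings_path.write_text(json.dumps(settings, indent=2) + "\n", encoding="utf-8")
    return settings
```

From your project root, call `merge_env(Path(".claude") / "settings.json", OVERRIDES)`, then run /model and /context as described to confirm the change.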

TOP & TRENDING RESOURCES

Top Tutorial

How to create a deep research multi-agent system: This Python tutorial walks you through building a deep research multi-agent system using open-source LLMs. It covers everything from mapping out research plans to handling subtasks with structured outputs. You'll also learn how to pull in Firecrawl via MCP tools, allowing a coordinator agent to wrap it all up into a solid final report.
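The coordinator pattern the tutorial describes (plan, fan out subtasks, collect structured outputs, write a report) can be sketched without any model calls. In this Python sketch, `plan`, `research`, and `write_report` are hypothetical stand-ins for the LLM and Firecrawl-backed agents, not the tutorial's code. The point is that workers return a typed `Finding` instead of free text, so the coordinator can merge results reliably.

```python
from dataclasses import dataclass, field


@dataclass
class Subtask:
    question: str


@dataclass
class Finding:
    """Structured output every worker must return (instead of free text)."""
    question: str
    summary: str
    sources: list[str] = field(default_factory=list)


def plan(topic: str) -> list[Subtask]:
    # Stand-in for a planner LLM call that breaks the topic into questions.
    return [Subtask(f"What is {topic}?"), Subtask(f"Who uses {topic} today?")]


def research(task: Subtask) -> Finding:
    # Stand-in for a worker agent that would search and scrape (e.g. via MCP tools).
    return Finding(task.question, f"Notes on: {task.question}", ["https://example.com"])


def write_report(topic: str, findings: list[Finding]) -> str:
    # Coordinator step: merge the structured findings into one report.
    sections = [f"# Report: {topic}"]
    for item in findings:
        sections.append(
            f"## {item.question}\n{item.summary}\nSources: {', '.join(item.sources)}"
        )
    return "\n\n".join(sections)


def run(topic: str) -> str:
    return write_report(topic, [research(task) for task in plan(topic)])


print(run("vector databases"))
```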

Top Repo

Pilot: It runs Claude Code tasks autonomously and automates your quality control, ensuring all tests and checks pass before any code touches your repo, while keeping all the relevant context in place.
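Pilot's internals aren't described here, but the general pattern of a quality gate is simple: run every check, and only let the change through if all of them pass. A hedged Python sketch of that pattern; the check names and commands are placeholders (in a real project they might invoke your test runner and linter).

```python
import subprocess
import sys


def run_checks(checks):
    """Run each (name, command) pair and return the names of failed checks."""
    failed = []
    for name, cmd in checks:
        result = subprocess.run(cmd, capture_output=True, text=True)
        if result.returncode != 0:
            failed.append(name)
    return failed


def gate(checks) -> bool:
    """Return True only if every check passes; the caller commits only then."""
    failed = run_checks(checks)
    if failed:
        print("blocked:", ", ".join(failed))
        return False
    print("all checks passed")
    return True


# Placeholder checks that always succeed; swap in real commands for your project.
CHECKS = [
    ("tests", [sys.executable, "-c", "assert 1 + 1 == 2"]),
    ("lint", [sys.executable, "-c", "import sys; sys.exit(0)"]),
]

gate(CHECKS)
```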

Trending Paper

Measuring AI agent autonomy (by Anthropic): We know surprisingly little about how much autonomy people actually give AI agents in real-world settings. This research reveals that as users gain experience, they grant agents more independence, shifting from step-by-step approvals to intervening only when necessary.

Grow customers & revenue: Join companies like Google, IBM, and Datadog. Showcase your product to our 150K+ engineers and 100K+ followers on socials. Get in touch.


What did you think of today's newsletter?

You can also reply directly to this email if you have suggestions, feedback, or questions.

Until next time — The Code team